Fri, 15 Apr 2005

Copying a Crapload of Files is Hard

I was recently faced with what turned out to be an unexpectedly difficult task: copying about 700GB of data from one filesystem to another. The data consisted of the backups of about 40 servers, as stored by BackupPC. BackupPC is a great piece of software for backing up a network of servers. It can back up machines using SMB, rsync, or tar over rsh/ssh. It compresses the backed-up files and pools them, so multiple copies of the same file are stored as hardlinks to a single compressed file in the pool.
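To make the pooling idea concrete, here is a minimal Python sketch of content-addressed pooling with hardlinks. It is not BackupPC's code: the function name `pool_file`, naming pool entries by an MD5 digest of the whole file, and zlib compression are assumptions for illustration. BackupPC's real pool naming, collision handling, and compressed file format differ, and this sketch ignores hash collisions entirely.

```python
import hashlib
import os
import zlib


def pool_file(src, pool_dir, dest):
    """Store src's contents in a content-addressed pool and hardlink dest to it.

    Identical contents map to the same pool entry, so each extra copy
    costs one directory entry instead of another compressed file.
    """
    with open(src, "rb") as f:
        data = f.read()
    # Name the pool entry after a digest of the (uncompressed) contents.
    pooled = os.path.join(pool_dir, hashlib.md5(data).hexdigest())
    if not os.path.exists(pooled):
        # First time these contents have been seen: store one compressed copy.
        with open(pooled, "wb") as out:
            out.write(zlib.compress(data))
    parent = os.path.dirname(dest)
    if parent:
        os.makedirs(parent, exist_ok=True)
    # The backup path becomes just another name for the pooled inode.
    os.link(pooled, dest)
    return pooled
```

Backing up the same file from two hosts leaves one pool entry with a link count of three (the pool name plus one name per host). The flip side is that the per-host backup trees consist almost entirely of hardlinks into the pool.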

In order to increase the storage capacity of the backup server, I was tasked with adding a second RAID array. I set up an LVM volume on the new array, and then needed to copy all of the files from the old array to the new one.

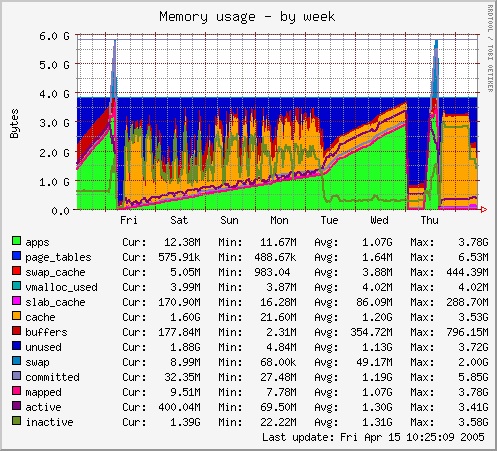

First, I started rsync and let it go. For a long time, the rsync process just kept growing and growing: before it copies any files, it walks the entire tree to build a list of everything it is going to copy. A known limitation of rsync is that it uses a lot of memory when a lot of files are involved. I didn't know how many files we had, but with 4GB of RAM in the machine, I was pretty confident we would be okay. After more than 24 hours, without having copied a single file, rsync died when it hit the 3GB per-process memory limit.
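Not knowing the file count up front is what made this a gamble. A single streaming pass can count the files before committing to a tool. This is a minimal sketch, assuming a POSIX system where `st_nlink` is meaningful; the `survey` function is hypothetical. It keeps only counters plus a stack of directories still to visit, so unlike a full file list its memory does not grow with the number of files. It also counts file entries whose inode has more than one link, which is what a hardlink-preserving copy has to remember.

```python
import os


def survey(root):
    """Count files and directories under root in a single streaming pass.

    Returns (files, dirs, linked), where `linked` is the number of file
    entries whose inode has a link count above one.
    """
    files = dirs = linked = 0
    pending = [root]  # directories not yet visited; no per-file list is kept
    while pending:
        with os.scandir(pending.pop()) as entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    dirs += 1
                    pending.append(entry.path)
                elif entry.is_file(follow_symlinks=False):
                    files += 1
                    # st_nlink > 1 means another name shares this inode.
                    if entry.stat(follow_symlinks=False).st_nlink > 1:
                        linked += 1
    return files, dirs, linked
```

On a BackupPC tree, the third number would be close to the total file count, since nearly everything is linked into the pool.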

For my next attempt, I used cp -a. cp started out all right. Throughput from the source volume was pretty abysmal, but after about 5 days the entire pool of compressed files had been copied. Then, while it was copying the individual servers' backup directories (which contain the hardlinks to the pool), cp also hit the 3GB limit and died.
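Why would cp run out of memory on the hardlinked directories? To recreate hardlinks, a copier has to remember, for every multiply-linked source inode, where it already copied that inode. GNU cp keeps a table along these lines. The sketch below is an illustrative Python version, not cp's code; `copy_tree_with_links` is a hypothetical name, and it only handles regular files and directories (symlinks, devices, and directory metadata are skipped).

```python
import os
import shutil
import stat


def copy_tree_with_links(src_root, dst_root):
    """Copy src_root to dst_root, recreating hardlinks among regular files.

    `linked` maps (st_dev, st_ino) of each multiply-linked source file to
    the first destination path it was copied to. Entries are never
    removed, so the table grows with the number of hardlinked inodes.
    Returns the final size of that table.
    """
    linked = {}
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel = os.path.relpath(dirpath, src_root)
        target_dir = os.path.normpath(os.path.join(dst_root, rel))
        os.makedirs(target_dir, exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(target_dir, name)
            st = os.lstat(src)
            if not stat.S_ISREG(st.st_mode):
                continue  # symlinks, devices, etc. are out of scope here
            key = (st.st_dev, st.st_ino)
            if st.st_nlink > 1 and key in linked:
                # Inode already copied: add another name instead of copying.
                os.link(linked[key], dst)
                continue
            shutil.copy2(src, dst)
            if st.st_nlink > 1:
                linked[key] = dst
    return len(linked)
```

Because every pooled file is linked from the backup trees, a table like `linked` ends up holding an entry for every pool file, each carrying a full destination path.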

I briefly thought about using sgp_dd to do a lower-level, block-by-block copy of the data, but that would have reproduced the original EXT3 filesystem, and we really wanted to use XFS for the new one. I also hadn't confirmed whether it would work with LVM volumes.

I ended up just starting with an empty BackupPC directory. We'll keep the old backups around until the new pool has built up sufficient history. Then we'll add the old array to the new volume for additional disk space.

An interesting project might be to add an option to rsync that lets it keep its state in a dbm file rather than entirely in memory. I guess nobody anticipated terabyte-sized disk volumes being used with 32-bit processors.
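Here is roughly what the dbm idea might look like, as a minimal sketch using Python's standard-library `dbm` module. `DiskLinkTable` is a hypothetical class, not an existing rsync (or cp) feature: it stores the inode-to-destination mapping in an on-disk database instead of an in-memory dictionary, so the table no longer has to fit in the process's 3GB address space.

```python
import dbm
import os


class DiskLinkTable:
    """Hypothetical on-disk replacement for an in-memory inode -> path table.

    Keys are b"dev:ino"; values are destination paths encoded with
    os.fsencode so non-UTF-8 file names survive the round trip.
    """

    def __init__(self, path):
        # Flag "n" always creates a new, empty database at `path`.
        self._db = dbm.open(path, "n")

    @staticmethod
    def _key(st):
        return f"{st.st_dev}:{st.st_ino}".encode()

    def remember(self, st, dest):
        """Record that the inode described by `st` was copied to `dest`."""
        self._db[self._key(st)] = os.fsencode(dest)

    def lookup(self, st):
        """Return the earlier destination path for this inode, or None."""
        value = self._db.get(self._key(st))
        return None if value is None else os.fsdecode(value)

    def close(self):
        self._db.close()

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        self.close()
```

A copier would call `lookup` before copying each multiply-linked file and `remember` after copying it. Lookups now go through the database on disk, so this would be slower than an in-memory table, but its size is bounded by disk space rather than address space.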